UK government programmers trialed AI coding assistants from Microsoft, GitHub, and Google – here's what they found

Software developers are reporting big efficiency gains from AI coding tools

The UK government has revealed its push to encourage AI use is delivering marked benefits for developers.

As part of a government-wide AI trial, more than 1,000 tech workers in 50 different departments trialed AI coding assistants from Microsoft, GitHub, and Google, including GitHub Copilot and Google Gemini Code Assist, between November 2024 and February this year.

Figures published following a review of the scheme show developers are saving around one hour each day, equivalent to around 28 working days a year.

Technology minister Kanishka Narayan said the trial scheme highlights the benefits of rolling the technology out across government.

"For too long, essential public services have been slow to use new technology – we have a lot of catching up to do," said Narayan.

"These results show that our engineers are hungry to use AI to get that work done more quickly, and know how to use it safely."

Most of the time savings from the AI assistants came from using them to write first drafts of code that experts then edit, or using them to review existing code.

Only 15% of the code generated by the AI assistants was used without any edits, which the government said shows engineers have been taking care to check and correct outputs where needed.

The trial appears to have been popular with government coders, with 72% of users agreeing the tools offered good value for their organization. Nearly two-thirds (65%) reported that they were completing tasks more quickly and 56% said they could solve problems more efficiently.

Notably, more than half (58%) of participants said they would prefer not to return to working without these solutions.

Tara Brady, president of Google Cloud EMEA, said the tech giant is “thrilled to see the positive impact” its AI coding tool delivered for government workers.

"This landmark trial, the largest of its kind for Gemini Code Assist in the UK public sector, underscores the transformative potential of AI in enhancing productivity and problem-solving for coding professionals, and highlights the successful collaboration stemming from Google Cloud’s Strategic Partnership Agreement with the UK government."

Prime minister Keir Starmer has been highly vocal about the government’s plans to roll out AI across government and public services since taking office. Downing Street hopes to save taxpayers more than £45 billion through the use of AI.

Questions remain over government AI coding gains

Martin Reynolds, field CTO at software delivery platform Harness, welcomed the move but questioned whether the plans go far enough. A key factor here, he noted, lies in the volume of manual remediation required by developers using AI-generated code.

"While AI is creating an initial velocity boost, 85% of government AI-generated code still needs to be manually edited by engineers," he said.

"That's before it enters the more manual downstream stages of delivery, such as testing, security scanning, deployment, and continuous verification, which are essential to getting code into production safely and reliably," Reynolds added.

The quality of AI-generated code has become a recurring talking point in recent months. A study from Fastly, for example, found that developers often end up manually remediating faulty code, which ultimately negates the time savings delivered by the technology.

Nigel Douglas, head of developer relations at software supply chain security firm Cloudsmith, also voiced concerns about potential security issues, saying there is little evidence of secure-by-design thinking.

Given the critical nature of the work conducted by developers in key government departments, this should be a key focus moving forward.

"Without security-aware tooling or policy enforcement, you can easily see over-enthusiastic use of AI coding assistants unknowingly introducing vulnerabilities into one of this country's most critical software ecosystems," he said.

"We're getting past the point where it's acceptable for software development teams to 'hope for the best' - you've got to be able to verify the provenance of the ingredients flowing through your software supply chain and into production systems, and you need tools to help respond to newly emerging threats that may impact what you've already deployed."

Emma Woollacott is a freelance journalist writing for publications including the BBC, Private Eye, Forbes, Raconteur and specialist technology titles.

-

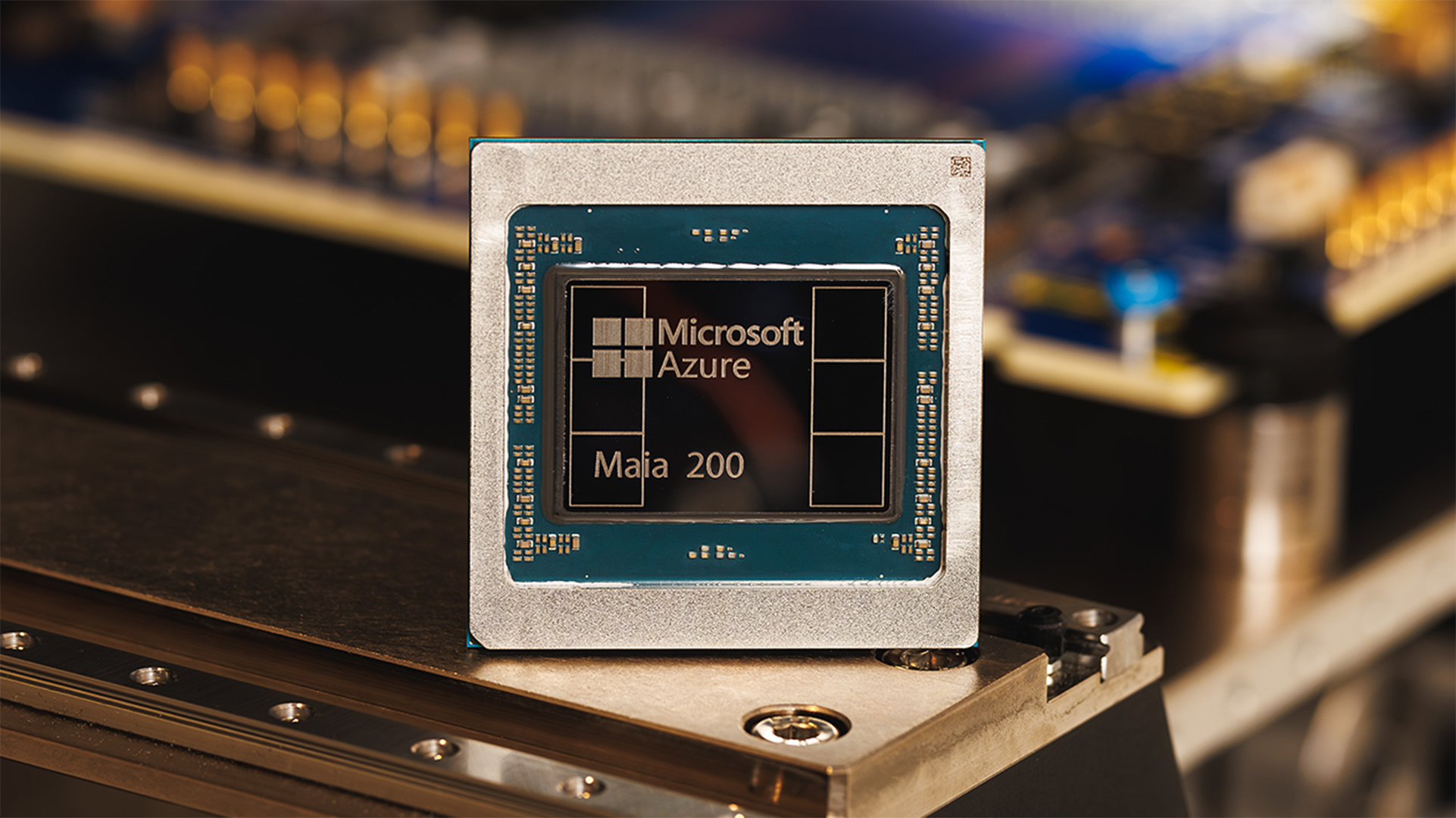

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

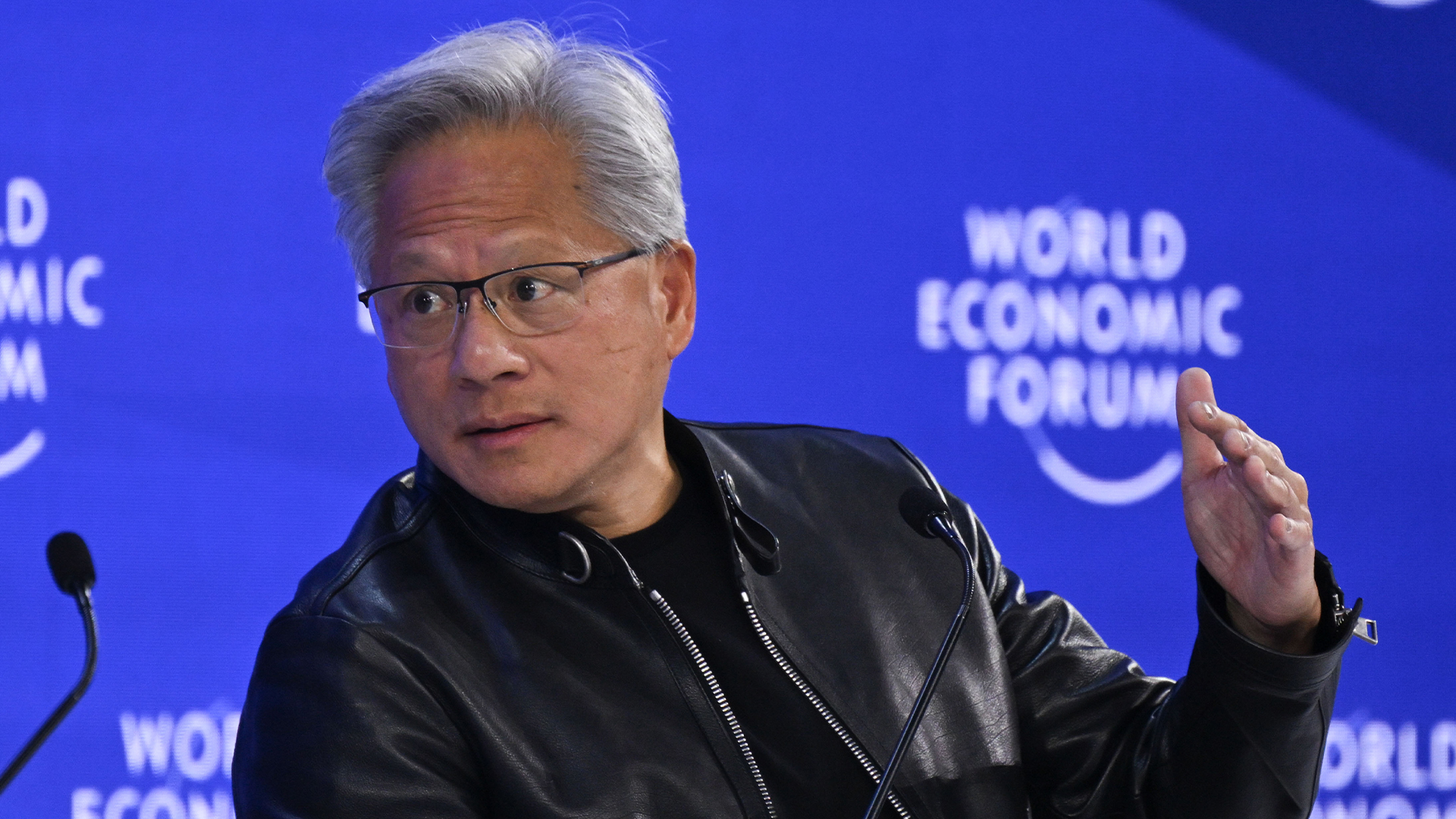

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software

-

Not keen on Microsoft Copilot? Don’t worry, your admins can now uninstall it – but only if you've not used it within 28 days

Not keen on Microsoft Copilot? Don’t worry, your admins can now uninstall it – but only if you've not used it within 28 daysNews The latest Windows 11 Insider Preview will include a policy for removing the app entirely — but only in certain conditions

-

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleagues

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleaguesNews A concerning number of developers are failing to check AI-generated code, exposing enterprises to huge security threats

-

New Gemini features are coming to Gmail, but don't worry, you can switch them off – Google says they're not a 'forced requirement' and users can opt for the classic version

New Gemini features are coming to Gmail, but don't worry, you can switch them off – Google says they're not a 'forced requirement' and users can opt for the classic versionNews Google has announced plans for deeper AI integration within Gmail to help users automate inboxes, here's how to turn the features off.

-

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivals

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivalsNews The tech giant is bracing itself for a looming battle in the AI coding space

-

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivity

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivityAnalysis AI adoption is expected to continue transforming software development processes, but there are big challenges ahead

-

‘1 engineer, 1 month, 1 million lines of code’: Microsoft wants to replace C and C++ code with Rust by 2030 – but a senior engineer insists the company has no plans on using AI to rewrite Windows source code

‘1 engineer, 1 month, 1 million lines of code’: Microsoft wants to replace C and C++ code with Rust by 2030 – but a senior engineer insists the company has no plans on using AI to rewrite Windows source codeNews Windows won’t be rewritten in Rust using AI, according to a senior Microsoft engineer, but the company still has bold plans for embracing the popular programming language