Anthropic announces Claude Opus 4.5, the new AI coding frontrunner

The new frontier model is a leap forward for the firm across agentic tool use and resilience against attacks


Anthropic has announced Claude Opus 4.5, its most advanced model to date and the new industry leader for AI code generation.

The AI company claims the new model is a strong contender for all agentic workflows, including code generation and autonomous computer use.

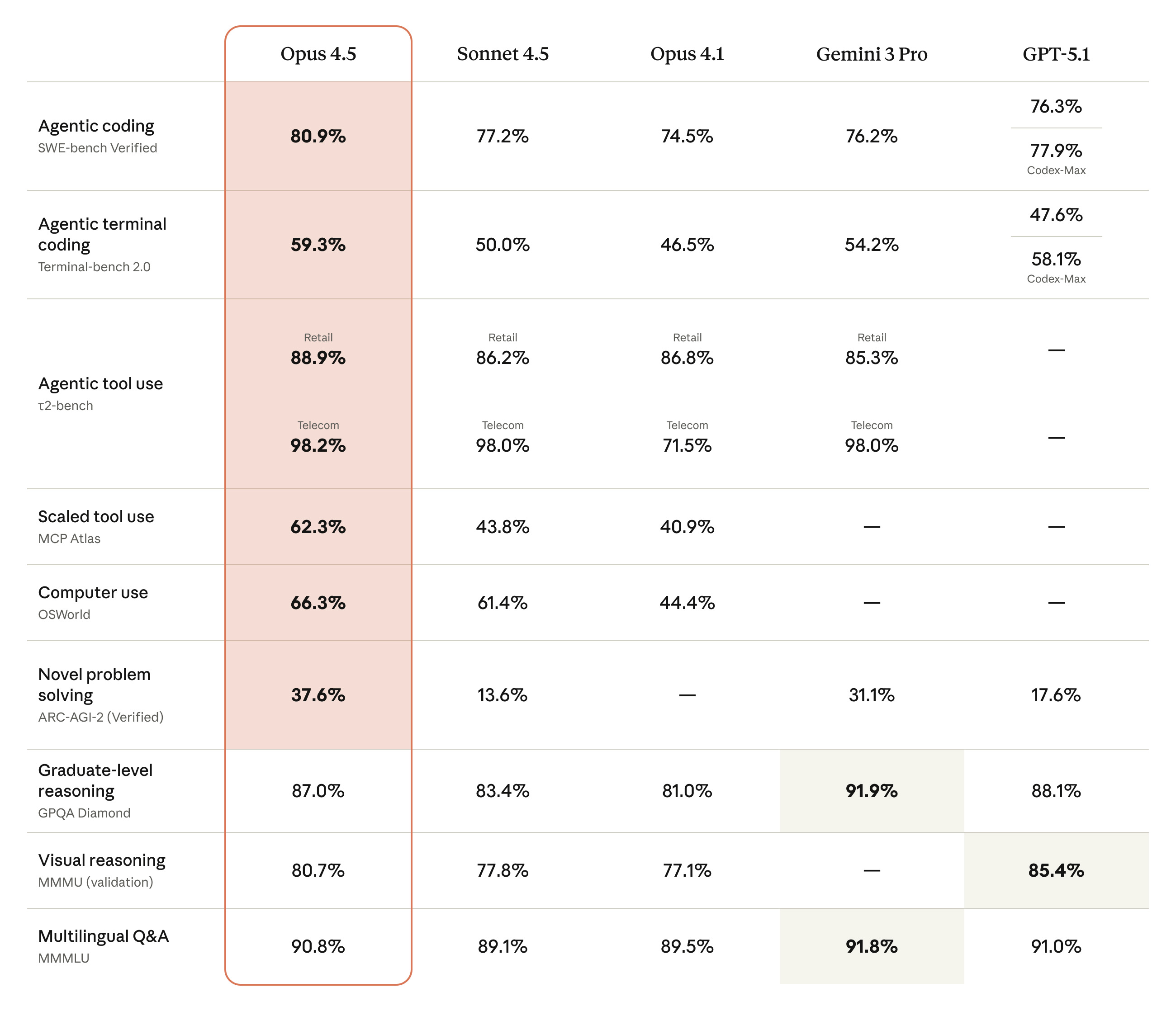

Opus 4.5 scored 80.9% on SWE-bench Verified, making it the new state-of-the-art model for code generation.

SWE-bench Verified is one of the most rigorous benchmarks for testing the agentic coding capabilities of AI models: models are presented with real-world coding problems taken from open-source GitHub repositories.

In comparison, GPT-5.1 Codex Max scored 77.9% and Gemini 3 Pro, Google’s latest frontier model, scored 76.2%.

Until now, Claude Sonnet 4.5 had been widely praised as the best AI model for generating code across a variety of programming languages.

In addition to its raw performance, Opus 4.5 offers developers more choice in how to approach a problem.


Via the Claude API, developers can use a new ‘effort’ parameter to control how many tokens the model spends on a given task. This affects both how long the response takes and how much it costs.

In tests, Opus 4.5 with effort set to ‘medium’ matched Claude Sonnet 4.5’s score on SWE-bench Verified while using 76% fewer output tokens.
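The 76% figure refers to output tokens, not price. The rough arithmetic below is only an illustration: the 10,000-token baseline and both per-million-token prices are hypothetical placeholders, not Anthropic's published rates. It shows that if the two models are priced differently per token, the cost saving will differ from the token saving.

```python
# Illustrative arithmetic for "76% fewer output tokens".
# The baseline token count and both prices are hypothetical placeholders.

TOKEN_REDUCTION = 0.76  # reported reduction vs. Sonnet 4.5 on SWE-bench Verified


def opus_medium_tokens(sonnet_tokens: float) -> float:
    """Output tokens Opus 4.5 at 'medium' effort would use, given Sonnet 4.5's count."""
    return sonnet_tokens * (1 - TOKEN_REDUCTION)


def output_cost(tokens: float, usd_per_million: float) -> float:
    """Cost in USD of `tokens` output tokens at a per-million-token price."""
    return tokens / 1_000_000 * usd_per_million


if __name__ == "__main__":
    sonnet_tokens = 10_000                 # hypothetical baseline for one task
    sonnet_price, opus_price = 10.0, 20.0  # hypothetical USD per million output tokens

    opus_tokens = opus_medium_tokens(sonnet_tokens)
    sonnet_cost = output_cost(sonnet_tokens, sonnet_price)
    opus_cost = output_cost(opus_tokens, opus_price)

    print(f"Sonnet 4.5: {sonnet_tokens:,} tokens, ${sonnet_cost:.3f}")
    print(f"Opus 4.5 (medium): {opus_tokens:,.0f} tokens, ${opus_cost:.3f}")
    print(f"Cost saving: {1 - opus_cost / sonnet_cost:.0%}")
```

With these placeholder prices, where the larger model costs twice as much per token, a 76% cut in output tokens works out to roughly a 52% cost saving.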

A big leap forward for Anthropic

Aside from its coding capabilities, Anthropic underlined the overall improvement Opus 4.5 brings to various enterprise tasks.

For example, the model is capable of complex information retrieval, agentic tool use, and deep analysis, as well as Excel automation.

Anthropic said that across agentic tool use benchmarks, Opus 4.5 consistently outperformed rival models.

In early testing with Excel automation, Anthropic said its customers measured 20% accuracy improvements and 15% efficiency gains.

Anthropic emphasized these tangible improvements as a sign that the Claude model family has become a strong choice for various enterprise tasks, in addition to its code-generation pedigree.

With the launch of Opus 4.5, Anthropic sees the three models in the Claude family fulfilling distinct roles in the development lifecycle:

- Opus 4.5 is the go-to model for core agentic tasks and production code, with a focus on maximum sophistication and accuracy.

- Sonnet 4.5 is the model of choice for agents at scale, particularly customer-facing agents, as well as for low-latency code generation during iterative development.

- Haiku 4.5 is aimed at businesses seeking free-tier access to Claude, as well as at powering sub-agents.

Anthropic defines sub-agents as agents with specific, predefined tasks that don’t necessarily require its frontier model to complete.

Expanding its computer use capabilities, Anthropic will also make Opus 4.5 available via a new Chrome extension, Claude for Chrome.

This will allow Max subscribers to have Claude take actions across their browser.

“Claude Opus 4.5 represents a breakthrough in self-improving AI agents,” said Yusuke Kaji, GM of AI for Business at Rakuten.

“For automation of office tasks, our agents were able to autonomously refine their own capabilities—achieving peak performance in 4 iterations while other models couldn’t match that quality after 10.

“They also demonstrated the ability to learn from experience across technical tasks, storing insights from past work and applying them to new challenges like SRE operations.”

New resilience to prompt injection

In addition to its benchmark improvements, Opus 4.5 was trained to be as trustworthy as possible and to defend against common prompt injection attacks launched against reasoning models.

When simulated attackers used 100 “very strong” prompt injection attacks, they saw a success rate of 63% against Opus 4.5 Thinking, compared to 87.8% against GPT-5.1 Thinking and 92% against Gemini 3 Pro Thinking.

When only a single attack was attempted, just 4.7% of attacks succeeded against Opus 4.5, versus 12.6% against GPT-5.1 Thinking and 12.5% against Gemini 3 Pro.


Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
