What is an NPU and what can it do for your business?

What are NPUs, how do they work, and why do 2024's business laptops have them?

Although we call the processors inside laptops and PCs "CPUs", that's far too simplistic. Inside each central processing unit sits a complex web of silicon comprising multiple chips, each with a set job to do. Now, a new type of chip has entered the frame: the neural processing unit, or NPU.

Well, a new-ish type of chip. The NPU has actually been around for some years. So what is an NPU? Why are they suddenly big news? And what difference could they make to business laptops in 2024?

What is an NPU?

Neural processing units are designed for one task: to run AI-related jobs locally on your computer. Take the simple example of blurring a background in video calls. It's perfectly possible for the main processor (or the graphics chip) in your computer to detect the edges of your face, hair, and shoulders, and then blur the background. But this is a task best done by an NPU.

The obvious follow-up question: why is an NPU better at this job? The main reason is that it's designed to run multiple threads in parallel and it thrives on large amounts of data. Video fits snugly into that description. So rather than solving the problem using algorithms and sheer brute force, a general-purpose processor (that is, your CPU) can offload the task to the NPU.

And because the NPU, with its lower power demands, is taking care of this task, it both frees up the CPU for other tasks and cuts down on the energy required.
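To make the background-blur example concrete, here is a toy sketch in plain Python. Everything in it is an assumption made for illustration: the function names `box_blur` and `blur_background` are hypothetical, the "frame" is a tiny grid of grayscale values, and the mask marking the person is written by hand. In a real video app, a trained neural network would predict that mask for every frame, which is the kind of work an NPU is built for.

```python
# Toy background blur on a tiny grayscale "frame" (a list of rows of ints).
# Hypothetical helper names; real apps predict the mask with a neural network.

def box_blur(frame):
    """Return a copy where each pixel is the mean of its 3x3 neighborhood."""
    height, width = len(frame), len(frame[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            # Collect the neighbors that fall inside the frame.
            neighbors = [
                frame[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
            ]
            row.append(sum(neighbors) // len(neighbors))
        blurred.append(row)
    return blurred


def blur_background(frame, person_mask):
    """Keep pixels where person_mask is 1; use the blurred value elsewhere."""
    blurred = box_blur(frame)
    return [
        [frame[y][x] if person_mask[y][x] else blurred[y][x]
         for x in range(len(frame[0]))]
        for y in range(len(frame))
    ]


frame = [
    [0, 0, 0, 0],
    [0, 90, 90, 0],
    [0, 90, 90, 0],
    [0, 0, 0, 0],
]
# 1 marks the "person". This mask is hand-written for the example.
person_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
result = blur_background(frame, person_mask)
```

Each output pixel depends only on a handful of nearby input pixels, so every pixel can be computed independently of the others. That independence is what makes this sort of workload a natural fit for hardware that runs many operations in parallel.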

What tasks can NPUs tackle?

While NPUs can tackle many tasks, the big one is generative AI. If you've used a service such as ChatGPT or Midjourney, then all the processing work happens in the cloud. AMD and Intel are betting big on what they're calling "AI PCs", where the workload shifts to the notebook or desktop PC instead. This isn't merely theory: it's perfectly possible to create AI art and ChatGPT-style conversations directly on a PC right now.

You don't even need a computer with an NPU inside to perform local generative AI tasks. For example, you can download Meta's Llama 2 open-source large language model today and run it on your computer. However, Llama 2 will be noticeably quicker and require less power on a PC with an NPU inside.

Why are NPUs suddenly hitting the news?

NPUs are not new. We've seen them in phones since 2017 when the Huawei Mate 10 used its AI-assisted Kirin 970 processor to perform automatic language translation when users pointed the phone's camera at text. The following year, Apple baked NPUs into its A12 processor, as found in the iPhone Xr, Xs, and Xs Max (and every iPhone since).

In fact, if you bought a premium phone in the past five years, chances are that it included NPUs. They will help with everything from adding bokeh effects and analyzing songs to speech recognition.

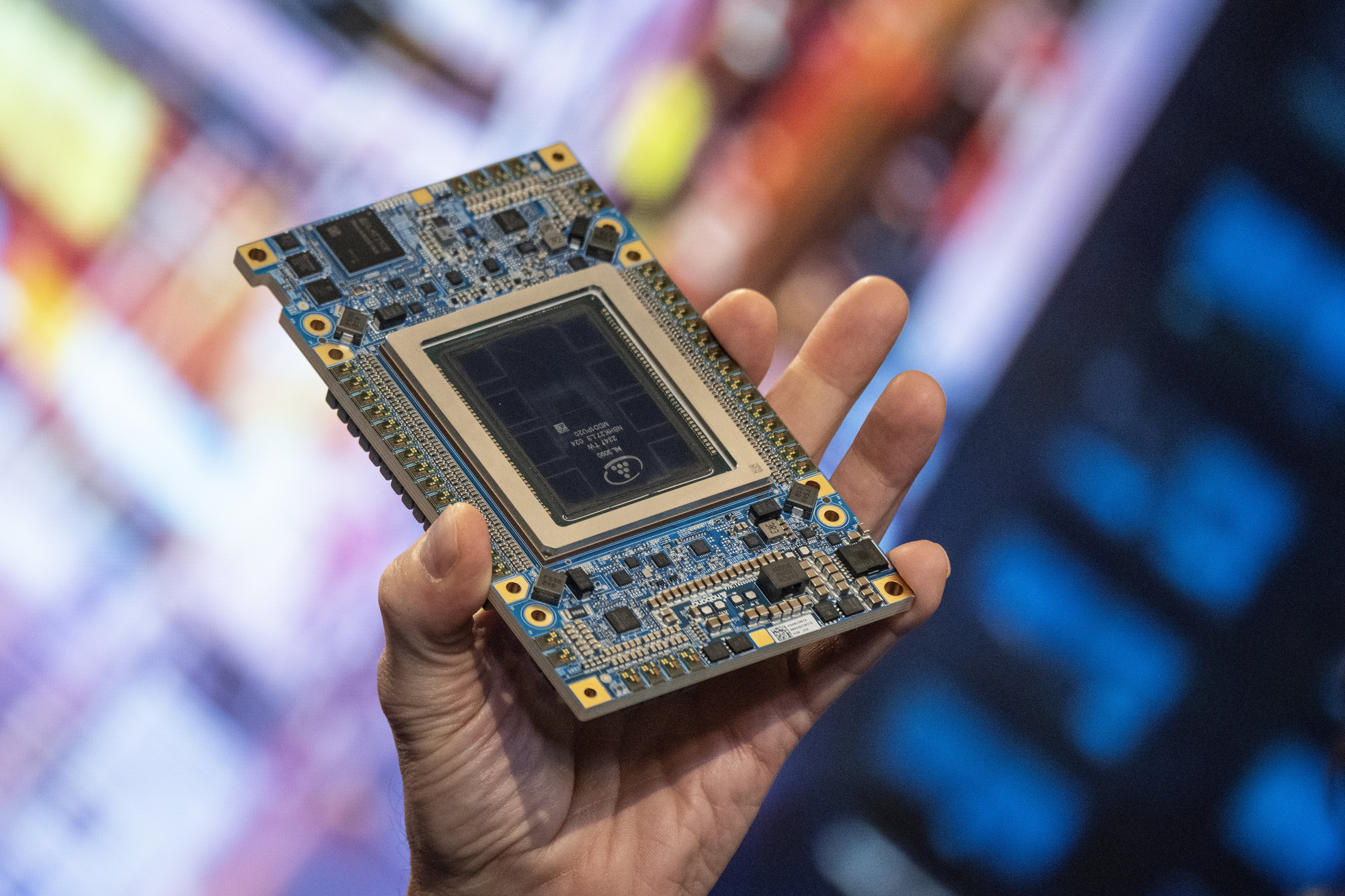

Everyone is suddenly shouting about NPUs because both AMD and Intel have released notebook processors that include neural processors. You'll find them in most of AMD's Ryzen 7040 series and 8040 series chips (look out for "Ryzen AI"), while Intel announced in December 2023 that its Core Ultra processors also include NPUs.

There's a certain amount of marketing spin going on here, but both AMD and Intel are now pushing the concept of "AI PCs". That is, computers – laptops in particular – that feature chips with NPUs inside.

What are AI PCs and when will they arrive?

AI PCs look identical to normal PCs. They're laptops, they're desktop machines, they're gigantic workstations. (Or at least they will be, once the chips are mainstream.) The only difference is that the processors inside include neural processors.

You can buy AI PCs right now, but you will have to hunt for them. For instance, the latest versions of Lenovo's Yoga Pro 7 14in and IdeaPad 5 Pro (14in and 16in) all feature AMD's Ryzen 7040 series chips with Ryzen AI inside, as do the most recent Acer Swift X 16 and Asus Vivobook Pro OLED models.

And you will find AMD's most powerful Ryzen 9 7940HS inside many high-end gaming laptops released in the second half of 2023. Laptops with Intel's Core Ultra chips will go on sale imminently, with many set to be announced at CES next week. Neither Intel nor AMD has yet announced desktop processors that feature NPUs, but we expect this to change during 2024.

How can NPUs help your business?

The biggest benefit of moving generative AI from the cloud to a local PC is privacy. Do you really want to upload your company's sensitive data to a nameless server? Microsoft has already announced that a version of its Copilot technology will run on local PCs in 2024, although details remain sparse.

There are latency advantages too, which is often the case when a task can be completed on a local system. Then there's the money factor. Often, generative AI services require a monthly subscription; switch to your processor, rather than one running in a data center, and that cost may well be reduced to zero.

Of course, all this is meaningless without services to choose from. This is why both AMD and Intel are wooing the likes of Adobe and Blackmagic Design (maker of DaVinci Resolve) to offload tasks to NPUs rather than CPUs and graphics cards.

However, we have yet to hear of anything radical and new that can only be done on an NPU. At the moment, the chief benefits center around reducing the load on the main CPU. Perhaps 2024 will see a killer app that requires AI PCs, but we expect this to be – forgive the cliché – more about evolution than revolution.

What are the limitations of NPUs?

Without wishing to end on a downbeat note, it's important that business leaders are realistic about what can be achieved on standalone devices. For the moment, large language models on AI PCs will feature fewer parameters than the LLMs we see in the cloud because they simply don't have the processing heft.
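A rough back-of-the-envelope calculation shows why parameter count matters on a standalone device. The sketch below is an illustration, not a benchmark: the helper name `model_memory_gb` is hypothetical, and it estimates only the memory needed to hold a model's weights, ignoring the extra working memory a model needs while it runs. The 7 billion and 70 billion figures match two of the sizes Llama 2 was released in, and 16-bit and 4-bit are assumed here as two commonly used precisions.

```python
def model_memory_gb(parameters, bits_per_parameter):
    """Approximate memory to hold the weights alone, in GB (10^9 bytes)."""
    return parameters * bits_per_parameter / 8 / 1e9


# Illustrative sizes and precisions; assumptions, not measurements.
sizes = [("7 billion", 7e9), ("70 billion", 70e9)]
for label, parameters in sizes:
    for bits in (16, 4):
        gigabytes = model_memory_gb(parameters, bits)
        print(f"{label} parameters at {bits}-bit: about {gigabytes:g} GB")
```

By this estimate, a 7-billion-parameter model squeezed to 4 bits per parameter needs about 3.5GB for its weights, which a typical business laptop can accommodate. A 70-billion-parameter model at 16 bits needs about 140GB, far more memory than a laptop offers.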

The biggest hurdle, however, is availability. Until NPUs become ubiquitous on laptops and desktop PCs, we don't expect to see an explosion in software and services built to take advantage.

Tim Danton is editor-in-chief of PC Pro, the UK's biggest selling IT monthly magazine. He specialises in reviews of laptops, desktop PCs and monitors, and is also author of a book called The Computers That Made Britain.

You can contact Tim directly at editor@pcpro.co.uk.

-

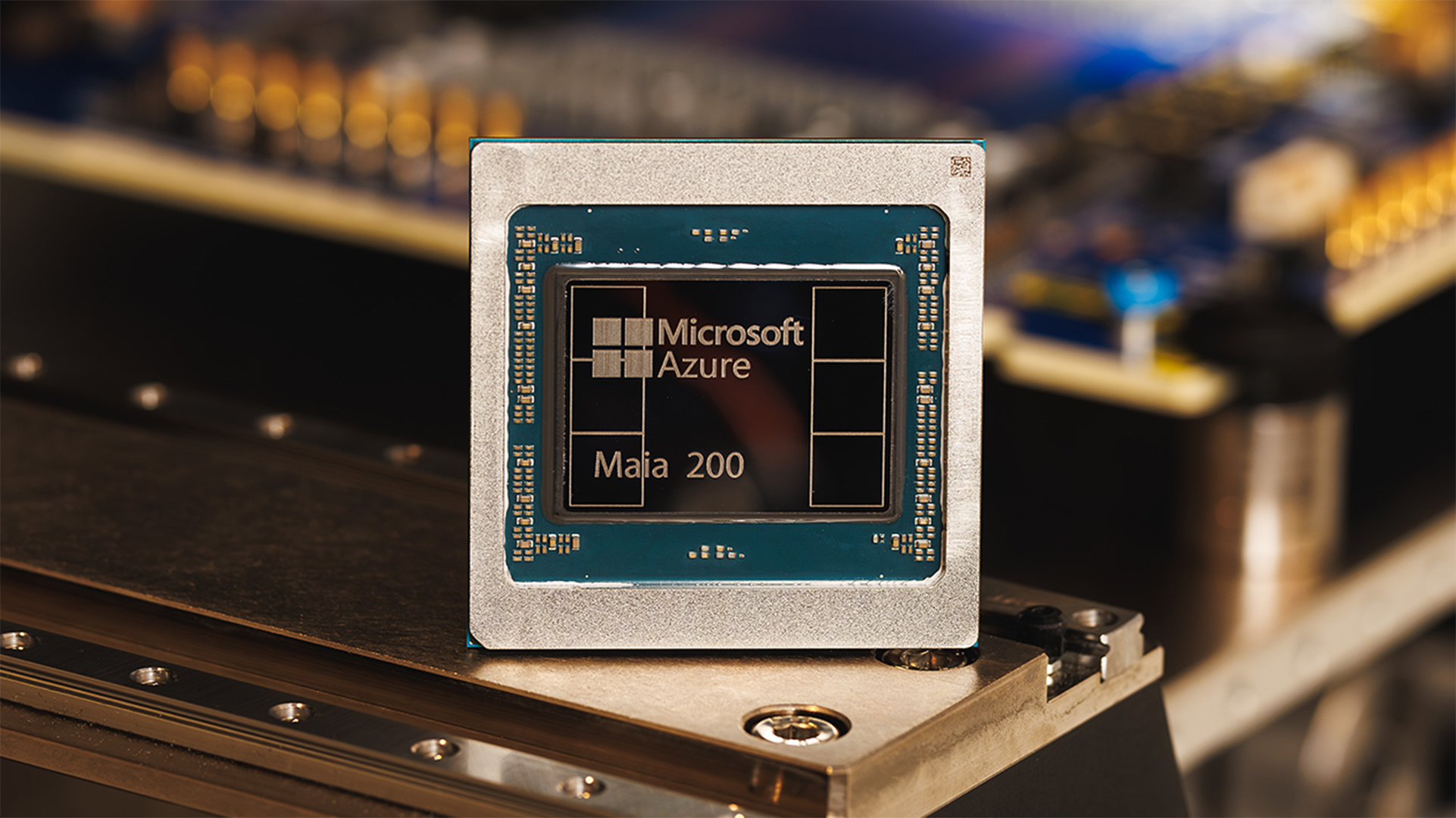

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation

-

Half of agentic AI projects are still stuck at the pilot stage – but that’s not stopping enterprises from ramping up investment

Half of agentic AI projects are still stuck at the pilot stage – but that’s not stopping enterprises from ramping up investmentNews Organizations are stymied by issues with security, privacy, and compliance, as well as the technical challenges of managing agents at scale

-

What Anthropic's constitution changes mean for the future of Claude

What Anthropic's constitution changes mean for the future of ClaudeNews The developer debates AI consciousness while trying to make Claude chatbot behave better

-

Satya Nadella says a 'telltale sign' of an AI bubble is if it only benefits tech companies – but the technology is now having a huge impact in a range of industries

Satya Nadella says a 'telltale sign' of an AI bubble is if it only benefits tech companies – but the technology is now having a huge impact in a range of industriesNews Microsoft CEO Satya Nadella appears confident that the AI market isn’t in the midst of a bubble, but warned widespread adoption outside of the technology industry will be key to calming concerns.

-

Workers are wasting half a day each week fixing AI ‘workslop’

Workers are wasting half a day each week fixing AI ‘workslop’News Better staff training and understanding of the technology is needed to cut down on AI workslop

-

Retailers are turning to AI to streamline supply chains and customer experience – and open source options are proving highly popular

Retailers are turning to AI to streamline supply chains and customer experience – and open source options are proving highly popularNews Companies are moving AI projects from pilot to production across the board, with a focus on open-source models and software, as well as agentic and physical AI

-

Microsoft CEO Satya Nadella wants an end to the term ‘AI slop’ and says 2026 will be a ‘pivotal year’ for the technology – but enterprises still need to iron out key lingering issues

Microsoft CEO Satya Nadella wants an end to the term ‘AI slop’ and says 2026 will be a ‘pivotal year’ for the technology – but enterprises still need to iron out key lingering issuesNews Microsoft CEO Satya Nadella might want the term "AI slop" shelved in 2026, but businesses will still be dealing with increasing output problems and poor returns.

-

OpenAI says prompt injection attacks are a serious threat for AI browsers – and it’s a problem that’s ‘unlikely to ever be fully solved'

OpenAI says prompt injection attacks are a serious threat for AI browsers – and it’s a problem that’s ‘unlikely to ever be fully solved'News OpenAI details efforts to protect ChatGPT Atlas against prompt injection attacks

-

Google DeepMind CEO Demis Hassabis thinks startups are in the midst of an 'AI bubble'

Google DeepMind CEO Demis Hassabis thinks startups are in the midst of an 'AI bubble'News AI startups raising huge rounds fresh out the traps are a cause for concern, according to Hassabis