Why AMD could struggle to topple Nvidia’s AI lead

AMD faces major hurdles in its plan to compete with Nvidia, Intel, and even AWS

AMD has announced optimistic projections for the coming fiscal year, driven by commitments for its new AI chip platform, but its battle to overturn Nvidia’s AI market share is just beginning.

As the firm enters the final quarter of 2023, it has targeted strong growth in its data center chip range, driven by increasing demand for the powerful hardware needed to run inference on generative AI models.

Key to this is AMD’s MI300 series of graphics processing units (GPUs), with the MI300X in particular offering enterprises the performance they require.

Lisa Su, CEO at AMD, told investors that the company had projected $2 billion in revenue driven by MI300 sales in FY24, with $400 million in Q4 2023 alone. The news came amid mixed results for the firm, with embedded and gaming segment revenue falling 5% and 8% year-on-year respectively, against a 42% year-on-year rise in client segment revenue.

“We delivered strong revenue and earnings growth driven by demand for our Ryzen 7000 series PC processors and record server processor sales,” said AMD Chair and CEO Dr. Lisa Su.

“Our data center business is on a significant growth trajectory based on the strength of our EPYC CPU portfolio and the ramp of Instinct MI300 accelerator shipments to support multiple deployments with hyperscale, enterprise and AI customers.”

AMD’s data center division saw flat revenue across Q3, which the firm pinned on diminishing sales of system-on-chip (SoC) products. But it believes this division can be a major source of growth, with rapid uptake of enterprise generative AI driving fierce demand in the space.

Without providing names, the firm stated that it is already ramping up shipments of MI300 chips in response to purchases from hyperscale and enterprise customers.

LLMs such as OpenAI’s GPT-4 or Meta’s Llama 2 contain billions of parameters, and for most firms training or running inference on models of this size is only achievable by paying to use specialized hardware from providers like Nvidia and AMD.

AMD targets AI battle with Nvidia

As in the gaming space, AMD’s main competitor in the AI hardware space will be Nvidia. The chips giant has had a bumper year, with its Hopper-based H100 chips becoming sought after for the training and inference of large language models (LLMs).

In terms of raw performance, AMD’s MI300X chip can be configured with up to 192GB of HBM3 memory and 5.2TB/s of memory bandwidth, which the firm says is sufficient to handle an 80-billion parameter model on a single GPU.

It compares favorably with Nvidia’s H100, which offers 80GB of HBM3 memory and a peak memory bandwidth of roughly 3TB/s per GPU in its SXM form, or 188GB across the two GPUs of the H100 NVL.

But Nvidia’s next-generation GH200 chip, built in anticipation of frontier AI models with a trillion parameters, is expected to be released in Q2 2024 and will present AMD with a tough standard to beat.
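As a rough sanity check on AMD’s claim that an 80-billion parameter model fits on a single MI300X, the sketch below estimates the memory taken up by model weights alone. It assumes 16-bit weights (two bytes per parameter) and ignores the KV cache, activations, and runtime overhead, so real headroom will be smaller. The function names and the precision table are illustrative and do not come from AMD.

```python
# Weights-only memory estimate. Assumption: memory = parameters x bytes per
# parameter; KV cache, activations and framework overhead are ignored.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(n_params: float, precision: str = "fp16") -> float:
    """Return the size of the model weights in decimal gigabytes."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


def fits(n_params: float, capacity_gb: float, precision: str = "fp16") -> bool:
    """True if the weights alone fit within the given memory capacity."""
    return weights_gb(n_params, precision) <= capacity_gb


MI300X_GB = 192  # HBM3 capacity quoted in the article

print(weights_gb(80e9))               # 160.0 GB at 16-bit precision
print(fits(80e9, MI300X_GB))          # True: 160 GB <= 192 GB
print(fits(80e9, MI300X_GB, "fp32"))  # False: 320 GB > 192 GB
```

Under these assumptions an 80-billion parameter model needs about 160GB for its weights, which leaves roughly 32GB of the MI300X’s 192GB for everything else.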

Comparing individual chips only gives part of the picture when it comes to each company’s ability to handle massive AI workloads.

Both firms have technologies that allow individual GPUs to be linked in order to tackle the largest compute challenges. Nvidia’s NVLink connects H100s to form the DGX H100, with 640GB of memory, and will eventually connect GH200s to form the DGX GH200, with 144TB of memory.

AMD’s Infinity Architecture Platform, meanwhile, can combine up to eight MI300X chips for a combined 1.5TB memory.
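The pooled-memory figures for these multi-GPU systems follow from simple multiplication, as the short sketch below illustrates. It assumes the DGX H100 holds eight GPUs with 80GB each, which is what the 640GB total implies, and it uses decimal units (1TB = 1,000GB). The helper name is illustrative only.

```python
def pooled_memory_gb(gpu_count: int, gb_per_gpu: int) -> int:
    """Total GPU memory across a system, in decimal gigabytes."""
    return gpu_count * gb_per_gpu


# Eight MI300X chips at 192GB each (figures from the article).
mi300x_platform_gb = pooled_memory_gb(8, 192)

# Assumption: eight H100 GPUs at 80GB each in a DGX H100.
dgx_h100_gb = pooled_memory_gb(8, 80)

print(mi300x_platform_gb)         # 1536 GB
print(mi300x_platform_gb / 1000)  # 1.536 TB, quoted as 1.5TB
print(dgx_h100_gb)                # 640 GB
```

On these numbers, an eight-GPU MI300X platform offers a little over twice the pooled memory of a DGX H100, although capacity alone says nothing about interconnect bandwidth or software support.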

"The biggest stumbling block that any vendor will need to overcome to compete with NVIDIA in AI is the install base of NVIDIA’s CUDA development environment," James Sanders, principal analyst, cloud and infrastructure at CCS told ITPro.

"Multiple vendors have attempted to compete on cost, with startups like Graphcore struggling as the infrastructure savings for lower-cost / higher-performance AI acceleration does not outweigh the technical friction introduced by adopting these alternatives.

"Wider adoption of open-source libraries (e.g., PyTorch) will ease this for inference, though training is likely a stickier problem. Even with corporate-sponsored collaboration in displacing CUDA using open-source tooling, bringing alternatives to feature parity and training scientists in how to use alternatives [is] a multi-year endeavor."

Where customers place their workloads will come down to a range of factors. On sheer performance, AMD has reason for confidence, but it will have to offer competitive pricing if it wants to capture a slice of the market already using Nvidia’s technology.

The firm would benefit from partnerships with prominent companies, but could struggle to secure them as Nvidia has already partnered with Dell, as well as Snowflake, Microsoft, and Oracle.

Other contenders in the space

AMD will have to contend for space in the AI market not only with Nvidia, but also with a number of other potential competitors.

AWS has leaned heavily into its own family of AI chips, Inferentia and Trainium, and is set for a collision of its own with Nvidia. With AWS focused on its internal capability to serve the compute needs of its clients, AMD will have to look to the other two of the ‘big three’ cloud providers, Microsoft and Google, if it hopes to score a decisive victory among the largest players in the AI space.

Customers of the Amazon Bedrock platform could see little reason to move outside of the AWS ecosystem, though the firm’s commitment to open AI development could give AMD space for third-party inference of models.

Intel wants to achieve AI dominance by 2025, and has specifically aimed to corner the market when it comes to data center processing.

Its Gaudi 2 accelerators were designed with LLMs in mind, and the chip was praised by Hugging Face machine learning (ML) engineer Régis Pierrard for its superior performance over Nvidia’s A100 GPU.

Intel has also announced a wide range of hardware products aimed at capturing the AI market beyond dedicated accelerators, including its Granite Rapids and Sierra Forest CPU families and field-programmable gate arrays (FPGAs).

Whether AMD can face off against more than one of its long-time competitors in order to find success in the AI space will depend on the versatility of its GPU lineup, and the ease and price of access for its services.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.

-

Will a generative engine optimization manager be your next big hire?

Will a generative engine optimization manager be your next big hire?In-depth Generative AI is transforming online search and companies are recruiting to improve how they appear in chatbot answers

-

European Commission clears Google’s Wiz acquisition, citing 'credible competition' from Amazon and Microsoft

European Commission clears Google’s Wiz acquisition, citing 'credible competition' from Amazon and MicrosoftNews Regulators said there are “several credible competitors” to Google regardless of the acquisition

-

HPE and Nvidia launch first EU AI factory lab in France

HPE and Nvidia launch first EU AI factory lab in FranceNews The facility will let customers test and validate their sovereign AI factories

-

AI Infrastructure for Business Impact: Enabling Agentic Intelligence with Scalable Compute

AI Infrastructure for Business Impact: Enabling Agentic Intelligence with Scalable Computewhitepaper

-

Dell Technologies doubles down on AI with SC25 announcements

Dell Technologies doubles down on AI with SC25 announcementsAI Factories, networking, storage and more get an update, while the company deepens its relationship with Nvidia

-

The role of enterprise AI in modern business

The role of enterprise AI in modern businessSupported Artificial intelligence is no longer the stuff of science fiction; it's a powerful tool that is reshaping the business landscape

-

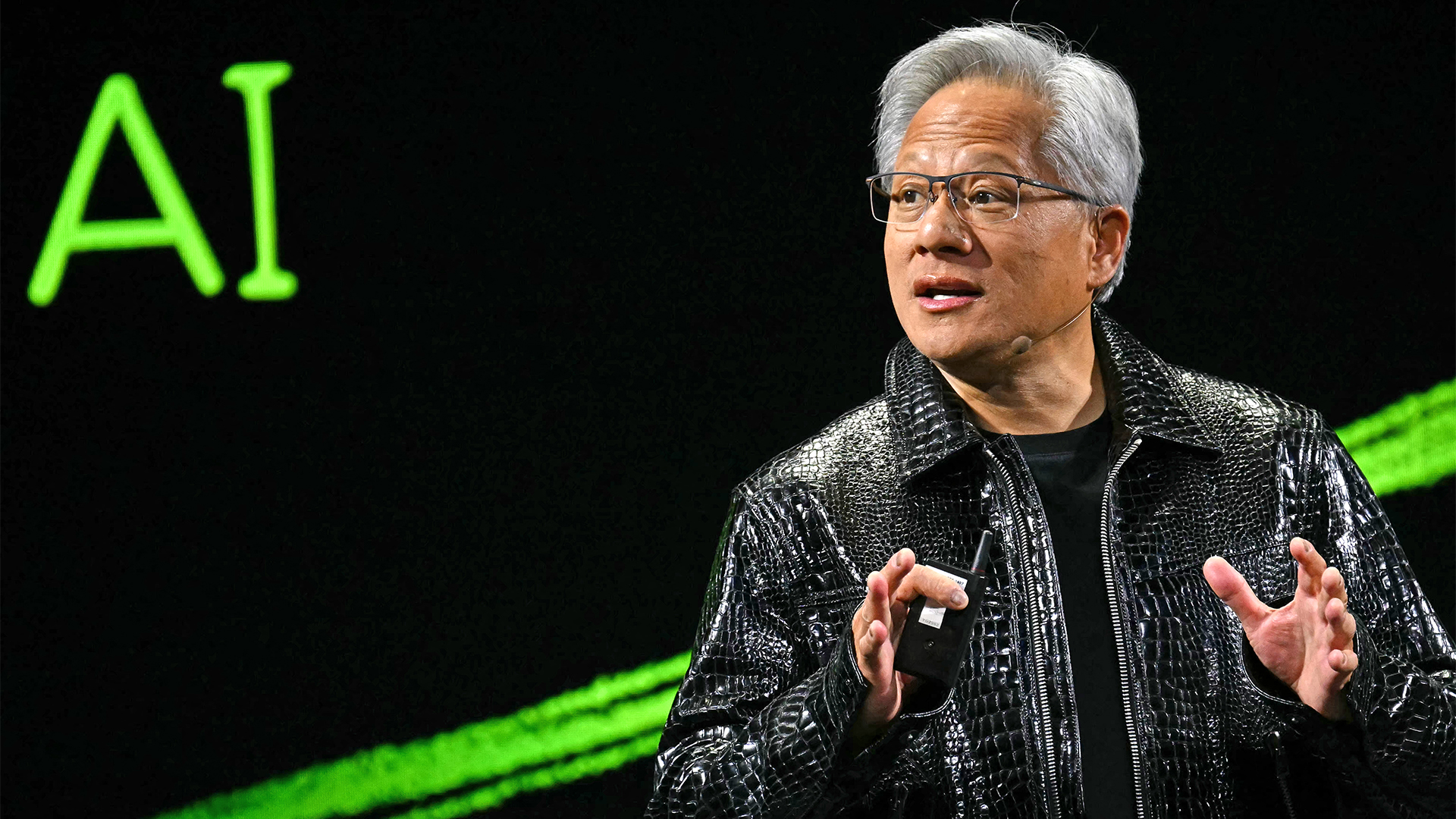

Nvidia CEO Jensen Huang says future enterprises will employ a ‘combination of humans and digital humans’ – but do people really want to work alongside agents? The answer is complicated.

Nvidia CEO Jensen Huang says future enterprises will employ a ‘combination of humans and digital humans’ – but do people really want to work alongside agents? The answer is complicated.News Enterprise workforces of the future will be made up of a "combination of humans and digital humans," according to Nvidia CEO Jensen Huang. But how will humans feel about it?

-

OpenAI signs another chip deal, this time with AMD

OpenAI signs another chip deal, this time with AMDnews AMD deal is worth billions, and follows a similar partnership with Nvidia last month

-

Why Nvidia’s $100 billion deal with OpenAI is a win-win for both companies

Why Nvidia’s $100 billion deal with OpenAI is a win-win for both companiesNews OpenAI will use Nvidia chips to build massive systems to train AI

-

Jensen Huang says 'the AI race is on' as Nvidia shrugs off market bubble concerns

Jensen Huang says 'the AI race is on' as Nvidia shrugs off market bubble concernsNews The Nvidia chief exec appears upbeat on the future of the AI market despite recent concerns